Functional Requirements

Viewers' Requirements:

- Immediate video playback upon request

- Consistent viewing experience regardless of traffic spikes

- Compatibility across different devices and network speeds

Creators' Requirements:

- Straightforward video upload URL procurement

- Efficient file transfer to the server for various video sizes

- Confirmation of successful upload and encoding

Non-Functional Requirements

- 100M Daily Active Users

- Read:write ratio = 100:1

- Each user watches 5 videos a day on average

- Each creator uploads 1 video a day on average

- Each video is 500MB in size on average

- Data retention for 10 years

- Low latency

- Scalability

- High availability

Capacity Planning

We will calculate QPS and storage using our Capacity Calculator.

QPS

QPS = Total number of query requests per day / (Number of seconds in a day)

Given that the DAU (Daily Active Users) is 100M, with each user performing 1 write operation per day, and a Read:Write ratio of 100:1, the daily number of read requests is: 100M * 100 = 10B.

Therefore, Read QPS = 10B / (24 * 3600) ≈ 115,741. By the same formula, Write QPS = 100M / (24 * 3600) ≈ 1,157.
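The arithmetic above can be reproduced with a few lines of Python. This is only a sketch of the estimate; the variable names are illustrative, and the inputs are the assumptions stated in this section (100M DAU, 1 write per user per day, 100:1 read:write ratio).

```python
SECONDS_PER_DAY = 24 * 3600


def qps(requests_per_day: float) -> float:
    """Average queries per second for a given daily request count."""
    return requests_per_day / SECONDS_PER_DAY


# Assumptions taken from the requirements above.
dau = 100_000_000
writes_per_user_per_day = 1
read_write_ratio = 100

daily_writes = dau * writes_per_user_per_day
daily_reads = daily_writes * read_write_ratio

write_qps = qps(daily_writes)
read_qps = qps(daily_reads)

print(f"write QPS ~ {write_qps:,.0f}")
print(f"read QPS  ~ {read_qps:,.0f}")
```

These are daily averages; peak traffic is typically higher, so real provisioning would add headroom on top of these figures.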

Storage

Storage = Total data volume per day * Number of days retained

Given that the size of each video is 500MB, and the daily number of write requests is 100M, the daily data volume is: 100M * 500MB = 50PB.

To retain data for 10 years, the required storage space is: 100M * 500MB * 365 * 10 ≈ 183EB.
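As a quick check of the storage estimate, the same calculation can be written out in Python. The sketch assumes decimal units (1MB = 10^6 bytes) and counts a single copy of each uploaded file; replication and transcoded renditions would add to the total.

```python
# Decimal (SI) units, an assumption of this sketch.
MB = 10**6
PB = 10**15
EB = 10**18

daily_uploads = 100_000_000       # one upload per daily active user
video_size_bytes = 500 * MB       # average video size
retention_days = 365 * 10         # 10 years, ignoring leap days

daily_bytes = daily_uploads * video_size_bytes
total_bytes = daily_bytes * retention_days

print(f"daily volume:  {daily_bytes / PB:.1f} PB")
print(f"10-year total: {total_bytes / EB:.1f} EB")
```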

Bottleneck

Identifying bottlenecks requires analyzing peak load times and user distribution. The largest bottleneck would likely be in video delivery, which can be mitigated using CDNs and efficient load balancing strategies across the network.

When planning for CDN costs, the focus is on the delivery of content. Given the 100:1 read:write ratio, most of the CDN cost will be due to video streaming. With aggressive caching and geographic distribution, however, these costs can be optimized.

High-level Design

The high-level design of YouTube adopts a microservices architecture, breaking down the platform into smaller, interconnected services. This modular approach allows for independent scaling, deployment, and better fault isolation.

Read Path: When a user requests a video, the request passes through the load balancer and API Gateway, which route it to the Video Playback Service. This service first checks the caching layer for the video's metadata and, on a cache miss, falls back to the Video Metadata Storage to look up the video's URL. The video is then streamed from the nearest CDN node to the user's device, keeping playback smooth.

- The Content Delivery Network (CDN) is crucial for delivering cached videos from a location nearest to the user, significantly reducing latency and enhancing the viewing experience.

- The metadata database is responsible for managing video titles, descriptions, and user interactions such as likes and comments. These databases are optimized to support high volumes of read operations efficiently.
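The metadata lookup on the read path is often implemented with a cache-aside pattern. The sketch below illustrates that idea only; `MetadataCache`, `get_video_metadata`, `load_from_db`, and the sample record are hypothetical names and data, and the in-memory dictionary stands in for a real cache such as Redis or Memcached.

```python
from typing import Callable, Dict, List, Optional

Record = Dict[str, str]


class MetadataCache:
    """In-memory stand-in for a distributed cache (an assumption of this sketch)."""

    def __init__(self) -> None:
        self._data: Dict[str, Record] = {}

    def get(self, key: str) -> Optional[Record]:
        return self._data.get(key)

    def set(self, key: str, value: Record) -> None:
        self._data[key] = value


def get_video_metadata(
    video_id: str,
    cache: MetadataCache,
    load_from_db: Callable[[str], Optional[Record]],
) -> Optional[Record]:
    """Cache-aside read: try the cache, fall back to the database on a miss."""
    cached = cache.get(video_id)
    if cached is not None:
        return cached
    record = load_from_db(video_id)
    if record is not None:
        cache.set(video_id, record)  # populate the cache for later readers
    return record


# Hypothetical metadata store and a call log to show when the database is hit.
FAKE_DB: Dict[str, Record] = {
    "v1": {"title": "Intro", "url": "https://cdn.example.com/v1/master.m3u8"},
}
db_calls: List[str] = []


def load_from_db(video_id: str) -> Optional[Record]:
    db_calls.append(video_id)
    return FAKE_DB.get(video_id)


cache = MetadataCache()
get_video_metadata("v1", cache, load_from_db)  # miss: reads the database
get_video_metadata("v1", cache, load_from_db)  # hit: served from the cache
print(db_calls)
```

A production cache would also need expiry or invalidation so that edited titles and descriptions do not stay stale; that is left out here.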

Write Path: Video uploads are handled through a distinct process that begins with the load balancer and API Gateway, directing the upload request to the Video Upload Service.

- Generating Signed URLs: The Video Upload Service obtains a signed URL from the Storage Service, which facilitates direct access to the object storage systems (e.g., Amazon S3, Google Cloud Storage, or Azure Blob Storage). A signed URL contains a cryptographic signature, granting the holder permission to perform specific actions (like uploading a file) within a designated timeframe.

- Direct Upload to Object Storage: The client application receives the signed URL and uses it to upload the video file directly to the object storage. This method bypasses the application servers, considerably reducing their workload and enhancing the system's overall scalability.

- Upload Completion Notification: Following a successful upload, the client notifies the Video Upload Service, providing relevant video metadata. This triggers subsequent processes such as video processing (transcoding), thumbnail generation, and metadata recording.

- Video Processing Pipeline: The upload event initiates a series of processing tasks, including content safety checks (to identify any prohibited content) and transcoding (to convert the video into different formats and resolutions and to compress the file).

- Distribution to CDNs: The final step involves uploading the processed videos to CDN nodes, ensuring that they are readily accessible to viewers from the most efficient locations.
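To make the signed-URL step concrete, here is a simplified sketch of the idea: the server signs the object key and an expiry time with a secret, and the storage side recomputes the signature before accepting the upload. This is not the signing scheme of S3, Google Cloud Storage, or Azure Blob Storage, each of which defines its own format; `SECRET_KEY`, `sign_upload_url`, `verify_upload_url`, and the `storage.example.com` host are hypothetical.

```python
import hashlib
import hmac
from urllib.parse import parse_qs, urlencode, urlparse

SECRET_KEY = b"demo-secret"  # hypothetical shared secret, for illustration only


def _signature(object_key: str, expires_at: int, secret: bytes) -> str:
    # Bind the signature to the allowed method, the object, and the expiry time.
    message = f"PUT\n{object_key}\n{expires_at}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def sign_upload_url(base_url: str, object_key: str, expires_at: int,
                    secret: bytes = SECRET_KEY) -> str:
    """Return a URL that grants upload permission until `expires_at` (Unix time)."""
    query = urlencode({
        "expires": expires_at,
        "signature": _signature(object_key, expires_at, secret),
    })
    return f"{base_url}/{object_key}?{query}"


def verify_upload_url(url: str, now: int, secret: bytes = SECRET_KEY) -> bool:
    """Check expiry and signature, as the storage side would before accepting."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires_at = int(params["expires"][0])
        signature = params["signature"][0]
    except (KeyError, ValueError):
        return False
    if now > expires_at:
        return False
    object_key = parsed.path.lstrip("/")
    expected = _signature(object_key, expires_at, secret)
    return hmac.compare_digest(signature, expected)


url = sign_upload_url("https://storage.example.com", "uploads/video123.mp4", 1000)
print(verify_upload_url(url, now=999))   # within the validity window
print(verify_upload_url(url, now=1001))  # after expiry
```

Because the signature covers the object key, a client cannot reuse the URL to write to a different path, and the expiry limits how long a leaked URL remains useful.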

Detailed Design

Data Storage

Video Table

    +------------------+--------------+-----------------+
    | video_id (PK)    | uploader_id  | file_path       |
    | title            | description  | encoding_format |
    | upload_date      | thumbnail    | duration        |
    +------------------+--------------+-----------------+

Users Table

    +------------------+-------------+--------------+
    | user_id (PK)     | username    | email        |
    | password_hash    | join_date   | last_login   |
    +------------------+-------------+--------------+

Video Stats Table

    +------------------+------------+-------------+------------+
    | video_id (FK)    | views      | likes       | dislikes   |
    | shares           | save_count | watch_time  |            |
    +------------------+------------+-------------+------------+

Explanation of each table:

- Video Table: This table stores metadata about each video, such as the video ID, uploader, storage path, and title. Title and description help in search and discovery, while file_path and encoding_format are vital for playing the video.

- Users Table: Retains data on registered users, including secure login information and activity logs for an enhanced, personalized experience.

- Video Stats Table: Crucial for content creators and recommendation algorithms; this table tracks video engagement and informs popularity metrics.
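The three tables can be sketched as a relational schema. The example below uses Python's built-in `sqlite3` module with an in-memory database purely for illustration; the column names come from the tables above, while the column types, constraints, and sample rows are assumptions.

```python
import sqlite3

# Column names follow the tables above; types and constraints are assumed.
SCHEMA = """
CREATE TABLE users (
    user_id       INTEGER PRIMARY KEY,
    username      TEXT NOT NULL UNIQUE,
    email         TEXT NOT NULL UNIQUE,
    password_hash TEXT NOT NULL,
    join_date     TEXT NOT NULL,
    last_login    TEXT
);
CREATE TABLE videos (
    video_id        INTEGER PRIMARY KEY,
    uploader_id     INTEGER NOT NULL REFERENCES users(user_id),
    file_path       TEXT NOT NULL,
    title           TEXT NOT NULL,
    description     TEXT,
    encoding_format TEXT,
    upload_date     TEXT NOT NULL,
    thumbnail       TEXT,
    duration        INTEGER
);
CREATE TABLE video_stats (
    video_id   INTEGER PRIMARY KEY REFERENCES videos(video_id),
    views      INTEGER NOT NULL DEFAULT 0,
    likes      INTEGER NOT NULL DEFAULT 0,
    dislikes   INTEGER NOT NULL DEFAULT 0,
    shares     INTEGER NOT NULL DEFAULT 0,
    save_count INTEGER NOT NULL DEFAULT 0,
    watch_time INTEGER NOT NULL DEFAULT 0
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# Hypothetical sample rows.
conn.execute(
    "INSERT INTO users (user_id, username, email, password_hash, join_date) "
    "VALUES (1, 'alice', 'alice@example.com', 'hash', '2024-01-01')"
)
conn.execute(
    "INSERT INTO videos (video_id, uploader_id, file_path, title, upload_date) "
    "VALUES (10, 1, 'videos/10/source.mp4', 'Intro', '2024-01-02')"
)
conn.execute("INSERT INTO video_stats (video_id, views) VALUES (10, 3)")

# Join the three tables to build a simple watch-page view.
row = conn.execute(
    "SELECT v.title, u.username, s.views "
    "FROM videos v "
    "JOIN users u ON u.user_id = v.uploader_id "
    "JOIN video_stats s ON s.video_id = v.video_id "
    "WHERE v.video_id = ?",
    (10,),
).fetchone()
print(row)
```

Keeping the frequently updated counters in `video_stats` separate from the mostly static `videos` row is one way to keep write-heavy updates away from the metadata that reads depend on.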

For database sharding, the choice of shard key typically depends on data distribution and access patterns. Videos may be sharded by video ID or uploader ID to balance the load among shards; users are often sharded by user ID for even distribution.

Database replication is another critical consideration for enhancing data availability and fault tolerance. Read replicas support high read volumes, especially on workloads skewed towards queries like fetching video stats.
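One common way to use read replicas is to send every write to the primary and spread reads across the replicas. The sketch below shows that routing decision in isolation; `ReplicatedDatabase` and the node names are hypothetical, and round-robin is just one possible selection policy.

```python
import itertools
from typing import Iterator, List, Optional


class ReplicatedDatabase:
    """Routes writes to the primary and reads to replicas in round-robin order."""

    def __init__(self, primary: str, replicas: List[str]) -> None:
        self.primary = primary
        self._replicas: Optional[Iterator[str]] = (
            itertools.cycle(replicas) if replicas else None
        )

    def route(self, is_write: bool) -> str:
        # Writes must go to the primary; reads fall back to it if no replica exists.
        if is_write or self._replicas is None:
            return self.primary
        return next(self._replicas)


db = ReplicatedDatabase("primary", ["replica-1", "replica-2"])
print(db.route(is_write=True))
print([db.route(is_write=False) for _ in range(3)])
```

With asynchronous replication, a replica may briefly lag behind the primary, so reads that must reflect a user's own recent write are sometimes routed to the primary as well.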

Examples:

- A record in the Video Table may relate to a specific video_id, storing which shard holds the corresponding video file.

- The Users Table might employ consistent hashing based on the user_id to distribute records across database instances.

- With the Video Stats Table, a sharding strategy using video_id facilitates join operations with the Video Table.

- Replication of the Comments Table could be implemented to serve hot data faster in different geographical locations while offering enhanced protection against data loss.
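The consistent hashing mentioned for the Users Table can be sketched as a hash ring with virtual nodes. This is a minimal illustration, not a production implementation; `ConsistentHashRing`, the `db-N` node names, the choice of MD5 as the ring hash, and the count of 100 virtual nodes per instance are all assumptions.

```python
import bisect
import hashlib
from typing import Iterable, List, Tuple


def _hash(key: str) -> int:
    # MD5 is used only to spread keys around the ring, not for security.
    return int(hashlib.md5(key.encode()).hexdigest(), 16)


class ConsistentHashRing:
    """Maps keys to nodes so that adding a node relocates only a share of the keys."""

    def __init__(self, nodes: Iterable[str], vnodes: int = 100) -> None:
        self._vnodes = vnodes
        self._ring: List[Tuple[int, str]] = []  # sorted (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each physical node owns several points on the ring to even out load.
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def get_node(self, key: str) -> str:
        if not self._ring:
            raise ValueError("ring has no nodes")
        # First ring position at or after the key's hash, wrapping around.
        idx = bisect.bisect_left(self._ring, (_hash(key), ""))
        if idx == len(self._ring):
            idx = 0
        return self._ring[idx][1]


ring = ConsistentHashRing(["db-0", "db-1", "db-2"])
keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.get_node(k) for k in keys}

ring.add_node("db-3")
after = {k: ring.get_node(k) for k in keys}

moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys moved to the new node")
```

With plain modulo sharding (`hash(user_id) % N`), changing the number of instances remaps most keys; on the ring, only the keys that fall into the new node's ranges move.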

Deep Dives

Video Transcoding

Video transcoding is the process of converting a video file from one format to another, adjusting various parameters such as the codec, bitrate, and resolution to ensure compatibility across a wide range of devices and internet connection speeds. This process is crucial for platforms like YouTube, where videos need to be accessible on everything from high-end desktops to mobile phones with limited bandwidth. Transcoding makes it possible to provide an optimal viewing experience regardless of the viewer's device or network conditions, by dynamically serving different versions of the video tailored to their specific situation.

The necessity of video transcoding for YouTube arises from the diverse array of content uploaded to the platform. Videos come in from creators around the world, encoded in various formats and qualities. To standardize this content for playback on the YouTube platform—and to ensure that every user, regardless of their device or internet speed, can watch videos without excessive buffering or data usage—transcoding is a must. It allows YouTube to convert these myriad formats into a consistent set of standardized formats. For instance, a high-resolution video might be transcoded into lower resolutions (1080p, 720p, 480p, etc.) to accommodate users with slower internet connections, while still being available in its original high definition for those with the bandwidth to support it.
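As an illustration of transcoding into a set of standard resolutions, the sketch below picks the renditions to produce for a given source and builds an ffmpeg command line for each one without running it. The ladder is hypothetical: the 360p rung and all bitrates are illustrative assumptions, not values from YouTube, and `select_renditions` and `ffmpeg_command` are names made up for this example.

```python
from pathlib import Path
from typing import List, Tuple

# (height in pixels, target video bitrate in kbit/s); illustrative values only.
RENDITION_LADDER: List[Tuple[int, int]] = [
    (1080, 5000),
    (720, 2500),
    (480, 1000),
    (360, 600),
]


def select_renditions(source_height: int) -> List[Tuple[int, int]]:
    """Keep only the rungs that do not upscale the source."""
    return [(h, kbps) for h, kbps in RENDITION_LADDER if h <= source_height]


def ffmpeg_command(src: str, height: int, kbps: int) -> List[str]:
    """Build (but do not run) an ffmpeg invocation for one rendition."""
    out = f"{Path(src).stem}_{height}p.mp4"
    return [
        "ffmpeg", "-i", src,
        "-vf", f"scale=-2:{height}",  # keep aspect ratio, force an even width
        "-c:v", "libx264", "-b:v", f"{kbps}k",
        "-c:a", "aac",
        out,
    ]


for height, kbps in select_renditions(source_height=720):
    print(" ".join(ffmpeg_command("upload.mov", height, kbps)))
```

In a real pipeline each command would typically run as a separate job, so the renditions of one video can be produced in parallel.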

Video Processing Pipeline

Grasping the building blocks ("the Lego pieces")

This part of the guide will focus on the various components that are often used to construct a system (the building blocks), and the design templates that provide a framework for structuring these blocks.

Core Building Blocks

At a bare minimum, you should know the core building blocks of system design:

- Scaling stateless services with load balancing

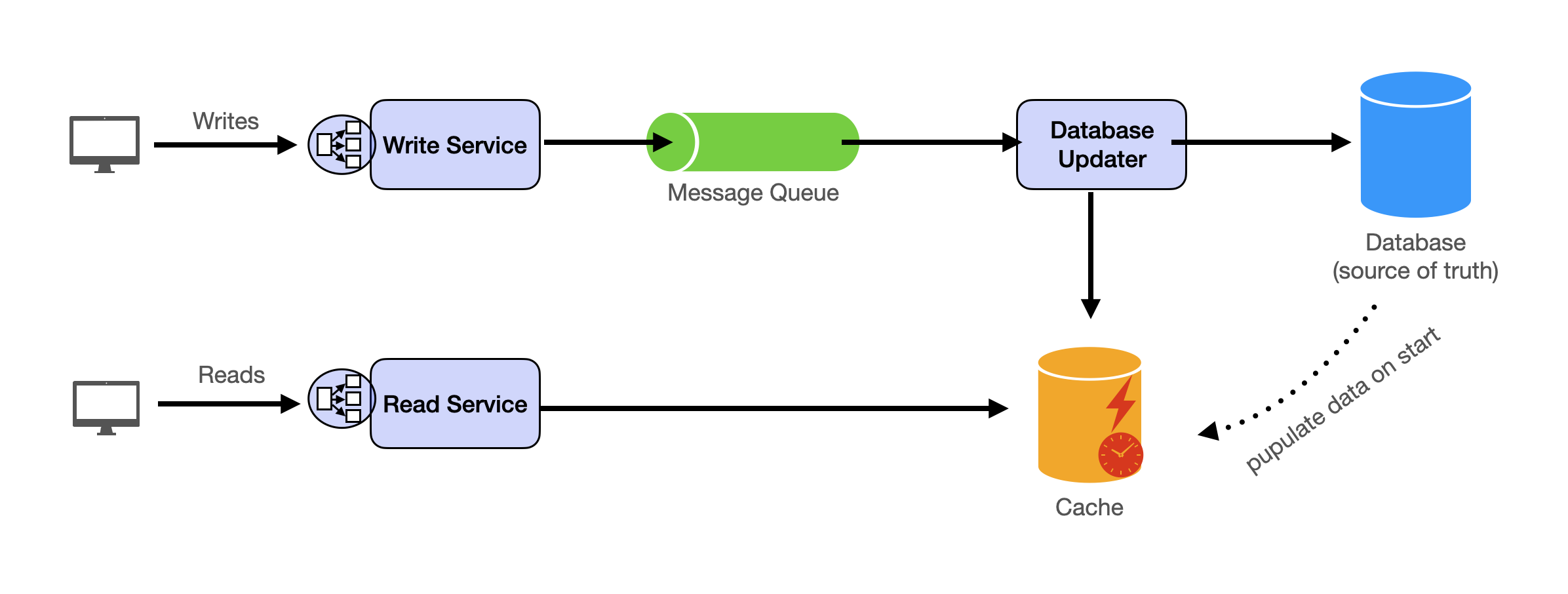

- Scaling database reads with replication and caching

- Scaling database writes with partitioning (aka sharding)

- Scaling data flow with message queues

System Design Template

With these building blocks, you will be able to apply our template to solve many system design problems. We will dive into the details in the Design Template section. Here’s a sneak peek:

Additional Building Blocks

Additionally, you will want to understand these concepts:

- Processing large amounts of data (aka “big data”) with batch and stream processing

  - Particularly useful for solving data-intensive problems such as designing an analytics app

- Achieving consistency across services using distributed transactions or event sourcing

  - Particularly useful for solving problems that require strict transactions such as designing financial apps

- Full text search: full-text index

- Storing data for the long term: data warehousing

On top of these, there is ad hoc knowledge tailored to certain problems that you will want to know. For example, geohashing is useful for designing location-based services like Yelp or Uber, and operational transformation helps with problems like designing Google Docs. You can learn these on a case-by-case basis. System design interviews are supposed to test your general design skills, not specific knowledge.

Working through problems and building solutions using the building blocks

Finally, we have a series of practical problems for you to work through. You can find the problems in /problems. This hands-on practice will not only help you apply the principles learned but will also enhance your understanding of how to use the building blocks to construct effective solutions. The list of questions is growing; we are actively adding more.