Introduction

We will use the top k most played songs as an example. In a music streaming service like Spotify, every time a user plays a song, it gets recorded. We want to find out the top k most played songs.

Typically, we cover the top three functional requirements in system design questions. However, in this problem, there is only one core functional requirement but it is quite complex. Therefore, we will use the functionalRequirements section to start with the simple case of only all-time top k songs and then gradually add more complexity.

Background

Global, Tumbling or Sliding Window

In a typical stream processing system, we need to group events by time windows. There are four types of windows: tumbling window, sliding window, session window, and global window. You can learn more about them in Stream Processing section.

Global window is a window that covers the entire period of data. For example, if we set up a global window, all events will be in the same window regardless of time. In the context of this problem, global window means the top K songs of all time.

Timeline: 0 5 10 15 20 25 30

Events: e1 e2 e3 e4 e5 e6 e7

Global Win: [---------------------------------------]

Tumbling window is a fixed size window that does not slide. Events in the same window are not overlapping and set by a start and end time. For example, if we set up a tumbling window of 10 minutes, all events in the last 10 minutes will be in the same window. At 10:00:00, the window starts and at 10:10:00, the window ends. And the next window will start at 10:10:00 and end at 10:20:00. In the context of this problem, tumbling window means top K songs at predefined time intervals.

Timeline: 0 5 10 15 20 25 30

Events: e1 e2 e3 e4 e5 e6 e7

Tumbling Win: [----W1----][----W2----][---W3---]

e1 e2 e3 e4 e5 e6 e7

Sliding window is a dynamic window that slides over time. For example, if we set up a sliding window of 10 minutes with a slide interval of 1 minute, all events in the last 10 minutes will be in the same window, but every minute, the window will slide forward by 1 minute. At 10:00:00, the window starts and at 10:10:00, the window ends. At 10:01:00, the window will slide forward to 10:11:00 and so on.

Now there is another question we need to answer about sliding window: is the slide interval fixed or can it be arbitrary?

- In a fixed sliding window, the window size and slide interval are predefined. Events are bucketed along a fixed time axis, meaning the windows open and close at fixed intervals, regardless of when events arrive. They are like tumbling windows except that the windows may overlap.

Timeline: 0 5 10 15 20 25 30

Events: e1 e2 e3 e4 e5 e6 e7

Fixed Win: [----W1----]

[----W2----]

[----W3----]

- In a arbitrary sliding window, the slide interval is not fixed. The window size is predefined. Events are bucketed along a dynamic time axis, meaning the windows open and close based on when events arrive.

Events: e1 e2 e3 e4 e5

Times: 12s 15s 18s 21s 25s

Arbitrary Win: [12s, 22s)

[15s, 25s)

[18s, 28s)

Which type of window to use?

Obviously, if you encounter this problem in an interview, you should clarify with the interviewer which type of sliding window they are asking for.

In the context of the Top K problem, depending on the scenario, we can choose different types of windows. In the real world, a tumbling window of 24 hours is a good choice. For example, Spotify's Top Songs Playlist is updated every 24 hours. The same is likely true for Amazon's Top products per day, YouTube's Top videos etc. Consumers are unlikely care about the top songs or videos of down to the minute or even hour.

However, if the interviewer insists on a more complex scenario, such as the top K songs in the last X minutes using a sliding window, we still need to be able to handle it.

How Top K Would Be Implemented in Production

Before we begin designing the system, let's answer a commonly asked question - can't we just use Flink to implement the top K aggregator in a sliding window?

Stream Processor Implementation

Yes, we can and it's only a few lines of code. In production, we could use a stream processor like Apache Flink, Spark Streaming, Kafka Streams, Google Cloud Dataflow, AWS Kinesis, Azure Stream Analytics, or whatever favorite stream processor to implement the top K aggregator. It's a very popular technology and there are many providers out there.

The typical data flow in a stream processor is to read data from a stream (Kafka, Pulsar, Kinesis, etc.), apply transformations and aggregations, and write the result to a stream (Kafka, Pulsar, Kinesis, etc.).

We would write MapReduce style code to:

- Apply transformations (filter, map, aggregate, join, etc.).

- Group or partition the data based on keys (e.g., by user_id or item_id).

- Use windowing logic (tumbling, sliding, or custom windows).

For example, in Flink, we can use .window(SlidingEventTimeWindows.of(Time.minutes(10), Time.minutes(1))) to set up a sliding window of 10 minutes with a slide interval of 1 minute and write a custom ProcessWindowFunction to compute the top K items within the window.

Here's a sample implementation of the top K aggregator in a sliding window in Flink.

Redis Sorted Set Implementation

If the question asks for the top K songs in a Global window, we have an even simpler implementation using Redis's sorted set (ZSET). A sorted set is a data structure that stores elements with a score. Elements are automatically ordered by their score in ascending order. Internally, it's implemented as a hash map and a skip list. The time complexity of the basic operations (add ZADD, remove ZREM) is O(log n). To find the top K elements, we can use ZREVRANGE which has a time complexity of O(log n + K).

Now that we have a good understanding of the background windowing types and how top K would be implemented in production using pre-built technologies, let's move on to the designing the system from scratch.

Functional Requirements

-

The system should allow users to view the top k most played songs of all time. The traffic is limited so that a single instance can handle it.

-

The system should allow users to view the top k most played songs of all time. The traffic is high so that we need to scale out the system.

-

The system should allow users to view the top k most played songs in the last X time in a sliding window.

Scale Requirements

- There are 10 billion song plays per day.

- There are 100 millions of songs.

- The top K songs API is accessed 1 million times per day.

Kis ~100-1000.

Non-Functional Requirements

- Low latency, ideally real time.

- High accuracy

API Endpoints

/top-items?k=100&time_window=1dReturn the top k most played songs in the last time window (1 day in this case). The time window is optional and defaults to all time if not specified. The response is a list of items with their IDs and play counts.

High Level Design

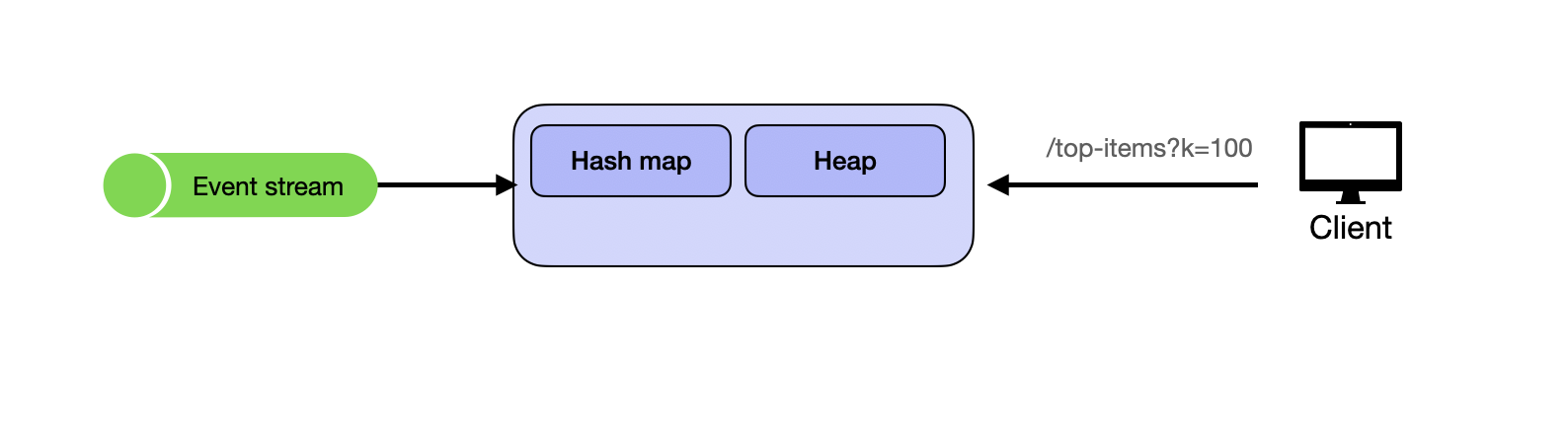

1. Global Window Top K, Low QPS

The system should allow users to view the top k most played songs of all time. The traffic is limited so that a single instance can handle it.

Data Structure

If you are relatively familiar with algorithms and data structures, the "Top K" in the question should scream Heap to you. Indeed, a heap is the most efficient way to find the top K elements, in particular, a min heap.

Here's a quick recap of how a min heap works: We can use a min heap to keep track of the top K elements. As new elements come in through the stream, we compare the current element with the root of the heap. If the current element is greater than the root, we replace the root with the current element and adjust the heap.

The head of the heap will always hold the least viewed video among the Top-K. Alongside the heap, we maintain a hash map to store the view counts and track the heap index for each video, ensuring efficient updates.

Here's how the data structure looks like:

- Heap: Stores

(VideoID, ViewCount)tuples, where the smallest element is at the root.

Heap: [(video1, 5), (video2, 3), (video3, 8)]

- Hash map: Stores

(VideoID, HeapIndex)pairs.

{ video1: (index: 0, count: 5), video2: (index: 1, count: 3), video3: (index: 2, count: 8) }

Why Store Heap Index in Hash Map?

When a new event arrives (e.g., event(videoId_X, viewCountsTotal_Y)), if the video already exists in the heap:

- We need to update its count.

- After updating the count, the element may no longer maintain the heap property, so we need to reheapify (bubble up or bubble down).

Without knowing the element’s current position in the heap, we would need to search the entire heap to locate it. This would take O(K) time (where K is the size of the heap), which is inefficient. By storing the heap index in the hash map, we can directly access the element’s position in O(1) time. This allows us to quickly modify the element’s count and perform the reheapify operation (in O(log K) time). For songs that are not in the top K heap, we can simply not store any index in the hash map to save space since the number of songs is much larger than K.

Algorithm to Maintain Top-K Videos

When a new event arrives, we first update the view count in the hash map. Then we check if the video is already in the heap.

Processing Logic:

- If

ViewCountis smaller than the head of the heap, discard the event since it won't affect the Top-K. - Otherwise, check if

VideoIDexists in the hash map:- If the video is already in the heap:

- Update the count in the hash map.

- Re-heapify by performing a bubble-down operation, where the element swaps with its children until the heap property is restored.

- If the video is not in the heap:

- Replace the head of the heap with the new video.

- Perform a sift-down operation to maintain the heap property.

- If the video is already in the heap:

Now this is pretty standard stuff with a min heap. At this point, it's really a DSA question. Our design needs to address the following issues:

- Scalability: The service operates as a single instance and keeps the min-heap in memory. It won't be able to handle 120k views/second, so we need a way to scale the service.

- Fault Tolerance: If the service crashes or is redeployed, the in-memory min-heap will be lost. We need a mechanism to persist the heap for recovery, avoiding the need to replay all events, which would be too slow.

- Query Flexibility: The service only supports queries for all-time top-K videos. It needs to be extended to support querying top-K for specific time windows (e.g., 1 hour, 1 day, or 1 month).

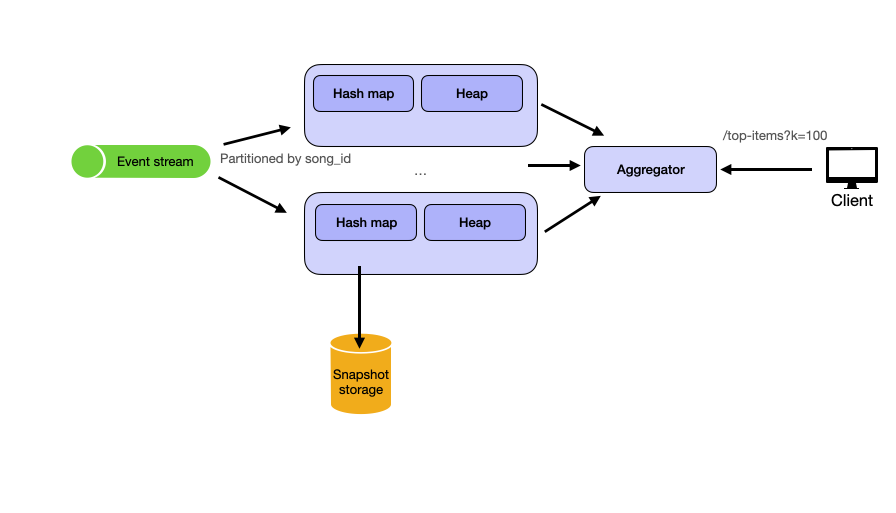

2. Global Window Top K, High QPS

The system should allow users to view the top k most played songs of all time. The traffic is high so that we need to scale out the system.

Let's address the first two issues in this section.

To Increase Throughput

The obvious solution to increase throughput is to partition the service to multiple instances. One design decision is how to map the event stream to the backend instances.

Partitioning Schemes

Round-Robin

Round-robin partitioning distributes events evenly across partitions.

How it works:

Events are distributed evenly across partitions in a round-robin fashion to backend instances.

Pros:

- Provides even load distribution.

- Simple to implement without coordination.

Cons:

- Can lead to inconsistencies, as events for the same song may be processed by different instances. We need to keep more than top K songs in each partition because a song may not be in the top K in one partition but is in the top K in overall count when aggregated across all partitions.

Conclusion:

Suitable with caveats.

To keep things simple, we'll use the fixed hash partitioning scheme.

-

Partition the event stream by song_id: Each backend instance processes events for a specific partition based on the song ID. This ensures that all events for a given song are routed to the same instance, avoiding inconsistencies when updating the heap.

-

Aggregate the top-K from each instance: After each instance processes events and maintains its local top-K heap, a coordinator service aggregates the top-K results from all instances to produce the final overall top-K.

To Increase Reliability

If an instance fails or crashes, its in-memory data structures (heap and hash map) are lost, so we need a mechanism for data recovery. We can persist the heap and stream offset as a snapshot to a remote datastore (e.g., Redis, DynamoDB, or cloud storage). This ensures that data can be recovered in case of a failure.

Each snapshot includes:

- Heap Data: The current state of the min-heap.

- Stream Offset: The position in the event stream from which events have already been processed.

On server crash, we can reconstruct the heap from the latest snapshot. It will then replay events from the stored stream offset to catch up with any missed events and restore the correct state.

If no snapshot is available for a given backend, the server falls back to replay events from the beginning of the stream to ensure consistency after a crash or restart.

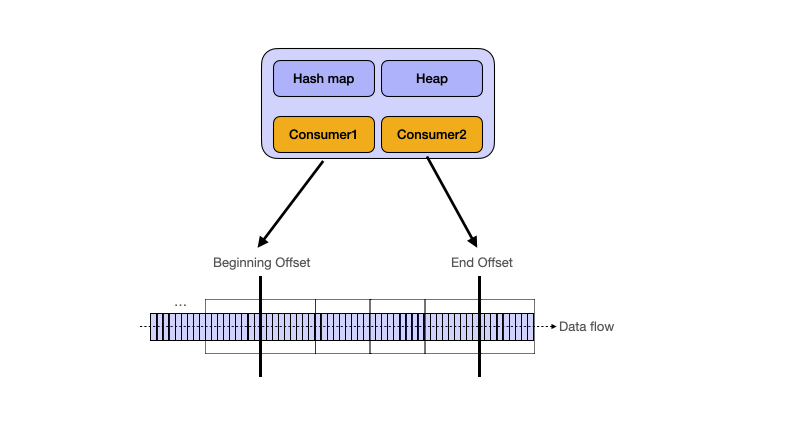

3. Sliding Window Top K, High QPS

The system should allow users to view the top k most played songs in the last X time in a sliding window.

Now let's tackle the sliding window version of the problem. A sliding window is tricky because it requires us to maintain a dynamic set of data. Adding data is easy, but how do we remove data that is no longer in the window?

Again if you are relatively familiar with algorithms and data structures, sliding window problems can be commonly solved using the Two Pointers Technique. We can borrow the idea of two pointers in our design.

In stream processing systems like Kafka, RabbitMQ, or Pulsar, an offset is a unique identifier that represents a position within a partition of a stream. It allows stream processors to track which messages have been processed and where to resume if interrupted. We can use two separate consumers per instance each maintaining a different offset to implement the sliding window.

- Beginning Offset: Marks the start of the time window.

- End Offset: Marks the most recent event processed.

We can calculate the top K songs in the current window by taking the difference between the end offset and beginning offset.

Advancing Offsets

As new events arrive, we maintain two offsets within each time window:

-

End Offset (for new events)

- Tracks the most recent event processed

- Ensures all new view events entering the time window are applied to the heap

- Example: Current timestamp (

now)

-

Beginning Offset (for expiring events)

- Tracks the oldest event that is still relevant in the sliding window

- Slides forward to remove expired events from the heap

- Example:

now - window_size(e.g.,now - 1hfor a 1-hour window)

Processing Events

For each time window update:

-

For the end offset (recent events):

- Process new events using the same heap logic as before

- Add new song plays to the counts

-

For the beginning offset (expired events):

- Do the reverse operation to remove expired events from the heap

- Ensure

timestamp(beginning + offset) <= now - window_size - This efficiently removes stale events from the window

Deep Dive Questions

Grasping the building blocks ("the lego pieces")

This part of the guide will focus on the various components that are often used to construct a system (the building blocks), and the design templates that provide a framework for structuring these blocks.

Core Building blocks

At the bare minimum you should know the core building blocks of system design

- Scaling stateless services with load balancing

- Scaling database reads with replication and caching

- Scaling database writes with partition (aka sharding)

- Scaling data flow with message queues

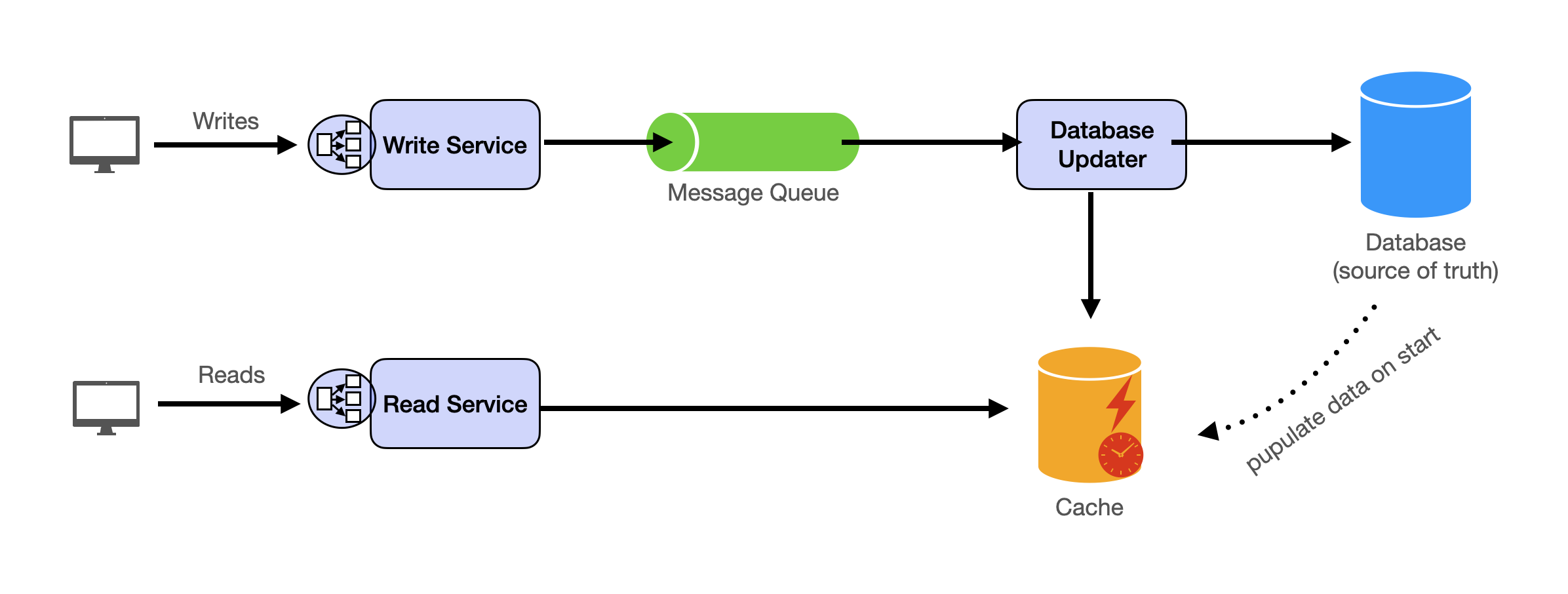

System Design Template

With these building blocks, you will be able to apply our template to solve many system design problems. We will dive into the details in the Design Template section. Here’s a sneak peak:

Additional Building Blocks

Additionally, you will want to understand these concepts

- Processing large amount of data (aka “big data”) with batch and stream processing

- Particularly useful for solving data-intensive problems such as designing an analytics app

- Achieving consistency across services using distribution transaction or event sourcing

- Particularly useful for solving problems that require strict transactions such as designing financial apps

- Full text search: full-text index

- Storing data for the long term: data warehousing

On top of these, there are ad hoc knowledge you would want to know tailored to certain problems. For example, geohashing for designing location-based services like Yelp or Uber, operational transform to solve problems like designing Google Doc. You can learn these these on a case-by-case basis. System design interviews are supposed to test your general design skills and not specific knowledge.

Working through problems and building solutions using the building blocks

Finally, we have a series of practical problems for you to work through. You can find the problem in /problems. This hands-on practice will not only help you apply the principles learned but will also enhance your understanding of how to use the building blocks to construct effective solutions. The list of questions grow. We are actively adding more questions to the list.